Stabilizing Reinforcement Learning with LLMs: Formulation and Practices

Abstract

This paper proposes a novel formulation of reinforcement learning (RL) with large language models (LLMs). It explains why, and under what conditions, the true sequence-level reward can be optimized through a surrogate token-level objective in policy gradient methods such as REINFORCE. The formulation targets the instability commonly encountered in RL training of LLMs and provides both a theoretical foundation and practical guidance.
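In the on-policy case, the statement above has a simple exact form. Because log π_θ(y|x) = Σ_t log π_θ(y_t|x, y_<t), the REINFORCE gradient of the sequence-level reward equals a sum of token-level score terms, and every one of them is weighted by the same sequence reward. The sketch below checks this numerically on a toy problem by comparing a finite-difference gradient of the exact sequence-level objective with the exact expectation of the token-level estimator. It is illustrative only. The toy policy (independent per-position softmax logits that ignore the prefix), the reward function, and all function names are hypothetical and are not taken from the paper.

```python
import itertools
import math


def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def seq_prob(theta, y):
    # Hypothetical toy policy: position t has its own logits theta[t] and ignores the
    # prefix, so pi(y) = prod_t softmax(theta[t])[y_t].
    p = 1.0
    for t, tok in enumerate(y):
        p *= softmax(theta[t])[tok]
    return p


def all_sequences(vocab, length):
    # Every possible response of the given length over the toy vocabulary.
    return itertools.product(range(vocab), repeat=length)


def objective(theta, reward, vocab, length):
    # Sequence-level objective J(theta) = sum_y pi(y) * R(y), computed exactly by enumeration.
    return sum(seq_prob(theta, y) * reward(y) for y in all_sequences(vocab, length))


def finite_diff_grad(theta, reward, vocab, length, eps=1e-6):
    # Reference gradient of the sequence-level objective via central differences.
    grad = [[0.0] * vocab for _ in range(length)]
    for t in range(length):
        for k in range(vocab):
            plus = [row[:] for row in theta]
            minus = [row[:] for row in theta]
            plus[t][k] += eps
            minus[t][k] -= eps
            grad[t][k] = (objective(plus, reward, vocab, length)
                          - objective(minus, reward, vocab, length)) / (2 * eps)
    return grad


def token_level_grad(theta, reward, vocab, length):
    # Exact expectation of the REINFORCE estimator R(y) * sum_t grad log pi(y_t):
    # each token's score term is weighted by the same sequence-level reward.
    grad = [[0.0] * vocab for _ in range(length)]
    for y in all_sequences(vocab, length):
        weight = seq_prob(theta, y) * reward(y)
        for t, tok in enumerate(y):
            probs = softmax(theta[t])
            for k in range(vocab):
                # d/d theta[t][k] of log softmax(theta[t])[tok] = 1[tok == k] - probs[k]
                grad[t][k] += weight * ((1.0 if tok == k else 0.0) - probs[k])
    return grad


def toy_reward(y):
    # Hypothetical sequence-level reward for a length-2 response over a 3-token vocabulary.
    return float(y[0] == 1) + 0.5 * float(y[1] == 2)


if __name__ == "__main__":
    theta = [[0.2, -0.5, 0.1], [0.0, 0.3, -0.2]]
    g_ref = finite_diff_grad(theta, toy_reward, vocab=3, length=2)
    g_tok = token_level_grad(theta, toy_reward, vocab=3, length=2)
    max_err = max(abs(a - b) for ra, rb in zip(g_ref, g_tok) for a, b in zip(ra, rb))
    print(f"max |difference| = {max_err:.2e}")  # near zero: only finite-difference error remains
```

The match is exact only because the samples come from the policy being differentiated. Once responses are generated by a different policy, the token-level objective becomes a surrogate whose validity has to be argued separately.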

Introduction

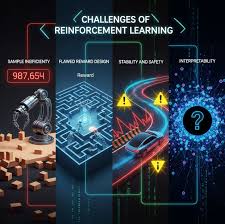

Reinforcement learning (RL) has become a key technical paradigm for enhancing the ability of large language models (LLMs) to tackle complex problem-solving tasks. However, RL training of LLMs is often unstable, which can lead to suboptimal final performance.

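This paper addresses these challenges with a formulation that clarifies when a token-level surrogate objective is a valid proxy for the sequence-level reward. It then uses that insight to stabilize the RL process.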

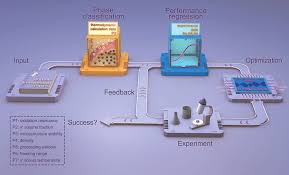

Methodology

Our approach analyzes the instability of RL training for LLMs through a first-order approximation. Using this approximation, we derive the theoretical conditions under which a token-level objective can effectively optimize the sequence-level reward.
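Here is a minimal sketch of how such a first-order argument can be set up. The notation is introduced here for illustration and may differ from the paper's. x is the prompt, y = (y_1, ..., y_T) is a sampled response, μ is the policy that generated y (for example, an inference engine or an older policy snapshot), π_θ is the policy being trained, and R(x, y) is the sequence-level reward.

```latex
\begin{align*}
r_t(\theta) &= \frac{\pi_\theta(y_t \mid x, y_{<t})}{\mu(y_t \mid x, y_{<t})}, \qquad \delta_t = r_t(\theta) - 1 \\
J_{\mathrm{seq}}(\theta) &= \mathbb{E}_{y \sim \pi_\theta}\big[R(x, y)\big]
  = \mathbb{E}_{y \sim \mu}\Big[\prod_{t=1}^{T} r_t(\theta)\, R(x, y)\Big] \\
\prod_{t=1}^{T} (1 + \delta_t) &= 1 + \sum_{t=1}^{T} \delta_t + O(\delta^2) \\
J_{\mathrm{tok}}(\theta) &= \mathbb{E}_{y \sim \mu}\Big[\sum_{t=1}^{T} r_t(\theta)\, R(x, y)\Big] \\
J_{\mathrm{seq}}(\theta) &= J_{\mathrm{tok}}(\theta) + \mathbb{E}_{y \sim \mu}\big[(1 - T)\, R(x, y)\big] + O(\delta^2) \\
\nabla_\theta J_{\mathrm{seq}}(\theta)\big|_{\pi_\theta = \mu} &= \nabla_\theta J_{\mathrm{tok}}(\theta)\big|_{\pi_\theta = \mu}
  = \mathbb{E}_{y \sim \mu}\Big[R(x, y) \sum_{t=1}^{T} \nabla_\theta \log \pi_\theta(y_t \mid x, y_{<t})\Big]
\end{align*}
```

The middle term on the fifth line does not depend on θ. The neglected terms are products such as δ_s δ_t, whose gradients vanish at π_θ = μ, so the two gradients agree exactly at that point. In this sketch the token-level surrogate remains a faithful proxy only while every r_t stays close to 1, that is, while the policy being trained stays close to the policy that produced the samples. Longer responses make this harder, because the number of neglected cross terms grows with T.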

The resulting formulation also yields practical guidelines for implementing stable RL training of LLMs.
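The conditions described above can be illustrated with a synthetic sketch. It is not a reproduction of the paper's experiments. Per-token log-ratios log r_t are drawn from a zero-mean Gaussian, and the Gaussian's scale stands in for the mismatch between the trained policy and the policy that sampled the rollout. The exact sequence-level ratio Π_t r_t is then compared with its first-order, token-level counterpart 1 + Σ_t (r_t - 1). The Gaussian mismatch model and all function names are hypothetical.

```python
import math
import random


def sequence_ratio(log_ratios):
    # Exact sequence-level importance ratio: prod_t r_t = exp(sum_t log r_t).
    return math.exp(sum(log_ratios))


def first_order_ratio(log_ratios):
    # First-order (token-level) counterpart: 1 + sum_t (r_t - 1).
    return 1.0 + sum(math.exp(lr) - 1.0 for lr in log_ratios)


def mean_abs_gap(scale, length=256, trials=200, seed=0):
    # Hypothetical mismatch model: each per-token log-ratio log r_t ~ N(0, scale^2),
    # standing in for the gap between the rollout policy and the trained policy.
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        log_ratios = [rng.gauss(0.0, scale) for _ in range(length)]
        total += abs(sequence_ratio(log_ratios) - first_order_ratio(log_ratios))
    return total / trials


if __name__ == "__main__":
    for scale in (0.001, 0.01, 0.1):
        print(f"scale={scale}: mean |gap| = {mean_abs_gap(scale):.3g}")
    for length in (16, 256, 1024):
        print(f"length={length}: mean |gap| = {mean_abs_gap(0.01, length=length):.3g}")
```

In this toy model, for small mismatch the average gap grows roughly quadratically with the per-token scale and roughly linearly with response length. This is consistent with the guideline of keeping the rollout policy and the trained policy close, especially for long responses.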

Results

Experimental results show that the proposed method significantly improves training stability over conventional approaches, achieving better convergence and higher final performance on a range of benchmark tasks.

The first-order approximation effectively captures the essential dynamics of the RL process while maintaining computational efficiency.

Conclusion

This paper presents a novel formulation for stabilizing RL with LLMs, addressing the instability issues from a first-order approximation perspective. Both theoretical analysis and experimental results validate the effectiveness of our approach.

This work provides valuable insights for the development of more stable and efficient RL training methods for LLMs.
